Creating an artificial intelligence modelled on the structure of the human brain carries a range of benefits that drastically outweigh any potential drawbacks. Apart from copying a human’s ability to think creatively, learn rapidly from inconsistent data, and exploit the evolutionary advantages that many traditional artificial intelligences lack, artificial copies of human brains will also allow us to study brain diseases and disorders without needing an actual, living patient.

A brain-like AI is most useful for tasks that require visual and auditory signals to complete fully and safely, such as driving a car or holding a realistic face-to-face conversation. A synthetic brain that uses synchronised ‘spikes’ of electricity in a neural network, like an organic one, will be far better at these tasks than a standard computer. However, it will also suffer the same drawbacks as an organic brain, such as fading memories and a reduced ability to read and use data in a logical rather than hierarchical fashion.
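The article does not say which neuron model such a synthetic brain would use. As a hedged illustration of what communicating through ‘spikes’ means, the sketch below swaps in the textbook leaky integrate-and-fire model: the neuron accumulates input, leaks charge over time, and emits a discrete spike only when a threshold is crossed. The function name and every parameter value (`tau`, `v_threshold`, and so on) are illustrative assumptions.

```python
def simulate_lif(input_current, dt=1.0, tau=20.0,
                 v_rest=0.0, v_threshold=1.0, v_reset=0.0):
    """Leaky integrate-and-fire neuron (illustrative parameters).

    Returns the time-step indices at which the neuron spikes.
    """
    v = v_rest
    spikes = []
    for step, current in enumerate(input_current):
        # The membrane potential leaks towards rest while integrating input.
        v += dt * (-(v - v_rest) + current) / tau
        if v >= v_threshold:
            spikes.append(step)  # emit a discrete spike...
            v = v_reset          # ...then reset the membrane potential
    return spikes


weak = simulate_lif([0.5] * 200)    # settles below threshold: no spikes
medium = simulate_lif([1.5] * 200)  # spikes at regular intervals
strong = simulate_lif([3.0] * 200)  # stronger input, higher spike rate
print(len(weak), len(medium), len(strong))
```

The information is carried by *when* and *how often* the neuron spikes rather than by a continuous output value, which is the key difference from the units in a conventional artificial neural network.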

In ‘How to Create a Mind’, well-known computer scientist Ray Kurzweil goes into significant detail about the differences between organic and artificial brains, as well as what can be done to construct artificial brains that have the strengths of both. In extremely simplified terms, human brains store and retrieve data very differently from AIs. Perhaps the most immediate benefit of combining the two, though, is that it allows machine learning to happen far faster and more reliably. Marrying artificial intelligence with synthetic-organic structure is a rapidly developing area of AI research. This is largely because of the effect AI can already have on business sectors like FinTech: complex, advanced AI has moved from ‘cool sci-fi idea’ to ‘realistic, profit-increasing business technology’, which (as one can imagine) has generated far more interest than before.

More interest means more funding, and so we’re seeing a lot more breakthroughs than before. We’re also seeing more advanced technology in general use and a wider public acceptance of technology too, which doesn’t hurt things either.

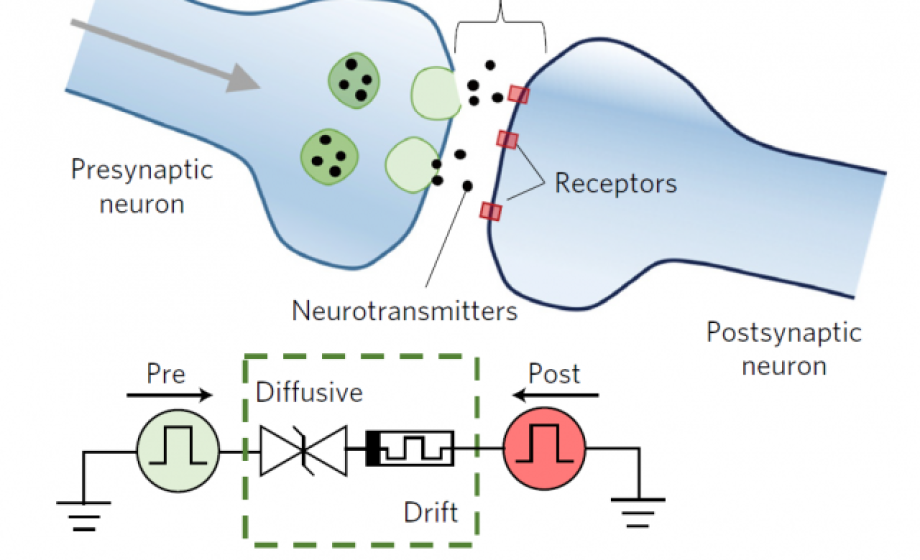

The latest development in this area came earlier this month, when AI researchers in Lake Wales, Florida, succeeded in creating a fast-acting, reliable synapse from ferroelectric materials and used it to imitate a biological structure. It works by emulating the plasticity of organic synapses: signals that recur frequently reinforce a connection, while signals that occur only rarely weaken it. The organic brain uses this mechanism to judge which data is important and which isn’t, and then stores or discards it accordingly.
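The frequency-dependent strengthening and weakening described above can be sketched with a simple rate-based update rule. This is not the researchers’ actual rule, which the article does not give; it is a minimal illustration under assumed parameters (`potentiation`, `decay`, and the weight bounds are hypothetical). A connection is nudged towards its maximum each time it is used and decays slowly whenever it is not.

```python
def update_weight(weight, used, potentiation=0.1, decay=0.05,
                  w_min=0.0, w_max=1.0):
    """One plasticity step with soft bounds (hypothetical parameters)."""
    if used:
        # Strengthen, with smaller gains as the weight nears its ceiling.
        weight += potentiation * (w_max - weight)
    else:
        # Weaken gradually when the connection is not exercised.
        weight -= decay * (weight - w_min)
    return weight


def train(pattern, initial=0.5):
    """Apply the rule over a sequence of used/unused time steps."""
    w = initial
    for used in pattern:
        w = update_weight(w, used)
    return w


frequent = [True, True, True, True, False] * 20   # used 80% of the time
rare = [True, False, False, False, False] * 20    # used 20% of the time
print(round(train(frequent), 3), round(train(rare), 3))
```

Both connections start at the same strength; the frequently used one settles at a high weight and the rarely used one drifts down, mirroring how the brain is described as keeping important data and letting the rest fade.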

The researchers’ synthetic synapses achieve this with a ferroelectric tunnel junction, whose connectivity changes whenever a voltage passes through it. This allows the ferroelectric material in each synapse (the synapses in turn link the artificial neurons) to grow stronger or weaker every time learning takes place, depending on how often it is used.
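To make the idea of a junction whose connectivity changes with each voltage pulse more concrete, here is a toy phenomenological model. It is an assumption-laden sketch, not the device physics from the study: it supposes the conductance depends on the fraction of ferroelectric domains switched into a high-conductance orientation, that pulses below a hypothetical `v_coercive` threshold change nothing, and that positive and negative pulses switch domains in opposite directions. All constants are illustrative.

```python
from dataclasses import dataclass


@dataclass
class FerroelectricJunction:
    """Toy ferroelectric tunnel junction (all constants illustrative)."""
    g_off: float = 1e-6      # conductance with no domains switched (siemens)
    g_on: float = 1e-4       # conductance with all domains switched (siemens)
    v_coercive: float = 1.0  # pulses weaker than this leave the state unchanged
    rate: float = 0.2        # fraction of remaining domains switched per volt of overdrive
    switched: float = 0.0    # fraction of domains in the high-conductance state

    def apply_pulse(self, voltage: float) -> None:
        overdrive = abs(voltage) - self.v_coercive
        if overdrive <= 0:
            return  # sub-threshold pulse: the stored state is non-volatile
        step = min(1.0, self.rate * overdrive)
        if voltage > 0:
            self.switched += step * (1.0 - self.switched)  # strengthen
        else:
            self.switched -= step * self.switched          # weaken

    @property
    def conductance(self) -> float:
        # Switched and unswitched regions conduct in parallel.
        return self.switched * self.g_on + (1.0 - self.switched) * self.g_off


junction = FerroelectricJunction()
for _ in range(5):
    junction.apply_pulse(2.0)   # repeated use strengthens the synapse
print(junction.conductance)
junction.apply_pulse(-2.0)      # an opposite pulse weakens it again
print(junction.conductance)
```

Because the state persists between pulses, the junction acts as a memory element as well as a connection, which is what lets a single device play the role of a plastic synapse.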

The artificial synapse is the first step towards building full computerised replicas of human brains that can house artificial intelligences. This is part of why the advance is so important: it is the foundation on which other researchers can build bigger, better and more complex self-learning networks that support better machine learning. Researchers from the Moscow Institute of Physics and Technology (MIPT) are already planning to build a synthetic brain based on the real thing, using analog components to make it function more organically. One such component is the memristor, which, measuring 40×40 nanometres, remembers how much current has flowed through it, and in which direction, through a change in its resistance.
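The memristor’s ‘memory’ of current can be illustrated with the widely cited linear ion-drift model, in which the device’s internal state moves in proportion to the charge that has passed through it. This is a simplified sketch, not a model of MIPT’s components: the class name, the state-change constant `k`, and the resistance values are illustrative assumptions.

```python
class LinearDriftMemristor:
    """Toy memristor: resistance tracks the net charge passed through it."""

    def __init__(self, r_on=100.0, r_off=16_000.0, k=1e4, x=0.1):
        self.r_on = r_on    # resistance when fully "on" (ohms, illustrative)
        self.r_off = r_off  # resistance when fully "off" (ohms, illustrative)
        self.k = k          # state change per coulomb (hypothetical constant)
        self.x = x          # normalised internal state, kept within [0, 1]

    @property
    def resistance(self):
        # Weighted mix of the low- and high-resistance regions.
        return self.r_on * self.x + self.r_off * (1.0 - self.x)

    def apply_current(self, current, duration):
        """Pass a constant current (amps) for `duration` seconds."""
        charge = current * duration  # signed, so direction matters
        self.x = min(1.0, max(0.0, self.x + self.k * charge))
        return self.resistance


m = LinearDriftMemristor()
start = m.resistance
after_forward = m.apply_current(1e-3, 0.01)   # forward current lowers resistance
after_reverse = m.apply_current(-1e-3, 0.01)  # equal reverse current undoes it
print(start, after_forward, after_reverse)
```

Because the state depends on the signed total charge, the device records both how much current flowed and in which direction, and it keeps that record with no power applied.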

Synthetic synapses also grabbed headlines this year when researchers at Stanford created a part-organic, part-synthetic synapse. Because these synapses are difficult to fabricate, the Stanford team and their partners at Sandia National Laboratories have so far made only one, but they took 15,000 measurements from it in order to simulate how a full neural network of such synapses would perform. The simulated network was able to recognise handwritten digits with 97 percent accuracy.
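One practical question such a simulation has to answer is how well a network performs when every weight must be stored as one of a limited set of device states. The sketch below assumes, purely for illustration, evenly spaced states across a fixed weight range (real devices, including the one measured, need not behave this way). It snaps ideal weights to the nearest available state and compares a neuron’s output at 500 states with a crude two-state version. The function names and the example weights are hypothetical.

```python
def quantize(weights, levels=500, w_min=-1.0, w_max=1.0):
    """Snap each weight to the nearest of `levels` evenly spaced states."""
    step = (w_max - w_min) / (levels - 1)
    snapped = []
    for w in weights:
        w = min(w_max, max(w_min, w))      # the device cannot exceed its range
        index = round((w - w_min) / step)  # nearest available state
        snapped.append(w_min + index * step)
    return snapped


def neuron_output(weights, inputs):
    """Weighted sum computed by a single artificial neuron."""
    return sum(w * x for w, x in zip(weights, inputs))


ideal = [0.123456, -0.654321, 0.999, -0.25]  # hypothetical trained weights
inputs = [1.0, 0.5, -0.3, 2.0]

exact = neuron_output(ideal, inputs)
err_500 = abs(neuron_output(quantize(ideal, 500), inputs) - exact)
err_2 = abs(neuron_output(quantize(ideal, 2), inputs) - exact)
print(err_500, err_2)
```

With 500 states each weight lands within half a step (1/499 here) of its ideal value, so the neuron’s output barely changes; with only two states the error is far larger.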

One reason these networks are so promising is that, unlike the bits of a traditional computer, which can only be in one of two states (0 or 1), these new devices can have at least 500 states, and possibly more as the technology develops.
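A quick calculation shows what 500 states means in conventional terms. This assumes every state can be reliably told apart, which makes the figure an upper bound rather than a measured capacity.

```python
import math


def bits_per_device(states):
    """Information one device can hold if all its states are distinguishable."""
    return math.log2(states)


def binary_cells_needed(states):
    """Two-state cells (bits) needed to encode one of `states` values."""
    return math.ceil(math.log2(states))


print(f"{bits_per_device(500):.2f} bits")  # 8.97 bits
print(binary_cells_needed(500))            # 9
```

In other words, a single 500-state device can store roughly as much as nine ordinary binary cells.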

The downside of this technology so far is that the synapse needs around 10,000 times as much energy to fire as a biological one does. However, considering the technology is still in its infancy, this is expected to be resolved as it develops. The drawback also pales in comparison to what the technology can achieve. Neural networks with machine learning algorithms can already compose music, understand the concept of law and order and even, in the case of a new AI developed by Facebook, identify potentially suicidal behavior in people and then reach out with an appropriate offer of help. These complex behaviors are all thanks to the increasing complexity of neural networks and the ability of machines to learn from the world around them. Memristors could accelerate this learning process significantly, making possible even more impressive feats of artificial intelligence that will improve, and even save, lives.

It is only May, but with this many major breakthroughs already in 2017, we should expect a huge year for artificial intelligence research.