
Researchers at RMIT University have developed a neuromorphic device that mimics how the human brain processes information. Built from ultra-thin layers of molybdenum disulfide (MoS₂), the device replicates key neuron functions, an advance that could benefit autonomous vehicles and robotics. The aim goes beyond imitating the brain: such devices could change how machines interact with their environments by providing real-time vision and memory without heavy computational overhead. If the approach scales, it could make automated systems in many industries faster and more efficient.

Brain-like Sensing

Neuromorphic vision and information processing is a fast-growing field that aims to build intelligent, energy-efficient computing systems. Central to it are spiking neural networks (SNNs), which mimic real brain cells by emitting brief electrical pulses (spikes) only when their input drives them past a threshold. A popular neuron model in this domain is the leaky integrate-and-fire (LIF) model: a neuron accumulates incoming signals, gradually leaks charge, and fires and resets once its potential crosses a threshold. This model underpins systems designed to handle visual tasks in a way akin to human perception.
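To make the LIF idea concrete, here is a minimal sketch of a single leaky integrate-and-fire neuron simulated with forward-Euler steps. It is a textbook formulation, not a model of the RMIT device's physics, and the time constant, threshold, and resistance values are illustrative assumptions in arbitrary units. The membrane potential follows τ·dV/dt = −(V − V_rest) + R·I(t), and the neuron spikes and resets whenever V reaches the threshold.

```python
def simulate_lif(input_current, dt=1e-3, tau=20e-3, v_rest=0.0,
                 v_threshold=1.0, v_reset=0.0, resistance=1.0):
    """Simulate one leaky integrate-and-fire neuron with forward-Euler steps.

    Dynamics: tau * dV/dt = -(V - v_rest) + resistance * I(t).
    Parameter values are illustrative assumptions, in arbitrary units.
    Returns (membrane-potential trace, list of step indices where it spiked).
    """
    v = v_rest
    trace = []
    spike_steps = []
    for step, current in enumerate(input_current):
        # Leak toward the resting potential while integrating the input.
        v += (dt / tau) * (-(v - v_rest) + resistance * current)
        if v >= v_threshold:
            spike_steps.append(step)  # fire a spike
            v = v_reset               # then reset the membrane potential
        trace.append(v)
    return trace, spike_steps


if __name__ == "__main__":
    _, strong = simulate_lif([1.5] * 200)  # supra-threshold drive
    _, weak = simulate_lif([0.9] * 200)    # settles at 0.9, below threshold 1.0
    print(len(strong), strong[:3])
    print(len(weak))
```

With a constant input of 1.5 the neuron fires at a regular rate, while an input of 0.9 never fires: the leak holds the potential at R·I = 0.9, just short of the threshold of 1.0.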

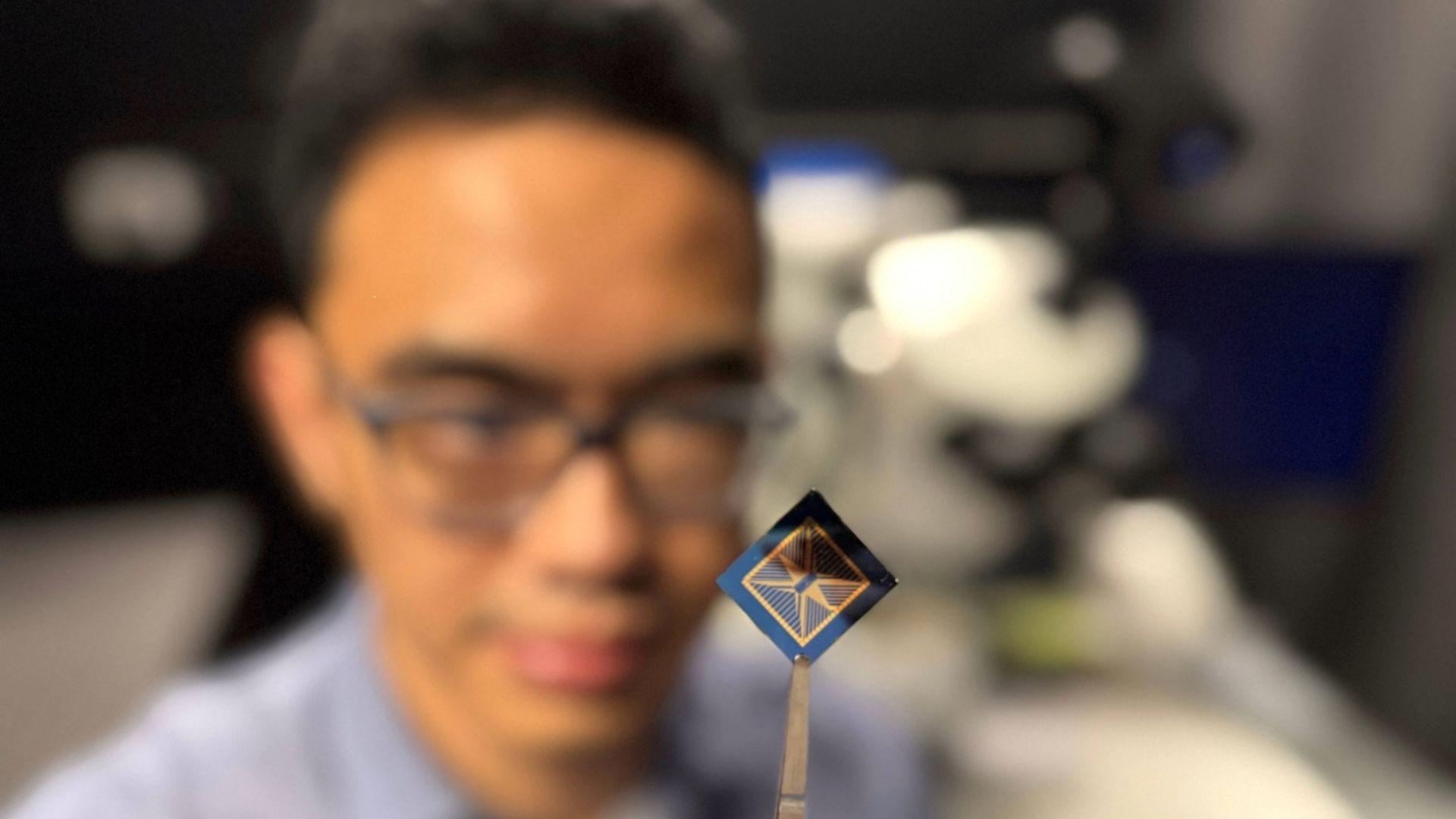

RMIT University researchers have combined neuromorphic materials with signal processing techniques to produce a device that captures and processes visual information in real time. At its heart is molybdenum disulfide (MoS₂), a layered semiconductor made of molybdenum and sulfur that detects light and converts it into electrical signals. This lets the device emulate the electrical behavior of neurons, storing and resetting its state as needed. Potential applications range from advanced robotics to improved navigation for autonomous vehicles.

Smart Visual Sensing

The researchers built a spiking neural network model based on the light-responsive properties of MoS₂. The model reached 75% accuracy on static image tasks after 15 training cycles and 80% on dynamic tasks after 60 cycles, results that point to its potential for real-time vision processing.
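The article does not describe the network's architecture, so the following is only a generic illustration of how an SNN can turn spikes into a classification: one layer of discrete-time LIF neurons receives a static input pattern, and the predicted class is the output neuron that fires most often. The weights are hypothetical, hand-set values rather than trained ones, so this sketch does not reproduce the study's training or its reported accuracies; the function names are likewise illustrative.

```python
def spike_counts(weights, pattern, steps=100, leak=0.95, threshold=1.0):
    """Count spikes from one layer of discrete-time LIF neurons.

    weights[j][i] links input pixel i to output neuron j (hypothetical,
    hand-set values). Each step, every neuron's potential decays by `leak`,
    integrates its weighted input, and fires and resets at `threshold`.
    """
    currents = [sum(w * x for w, x in zip(row, pattern)) for row in weights]
    potentials = [0.0] * len(weights)
    counts = [0] * len(weights)
    for _ in range(steps):
        for j, current in enumerate(currents):
            potentials[j] = leak * potentials[j] + current
            if potentials[j] >= threshold:
                counts[j] += 1
                potentials[j] = 0.0
    return counts


def classify(weights, pattern, **kwargs):
    """Predict the class whose output neuron spiked most (ties go to the lowest index)."""
    counts = spike_counts(weights, pattern, **kwargs)
    return max(range(len(counts)), key=counts.__getitem__)


def accuracy(weights, patterns, labels, **kwargs):
    """Fraction of patterns whose predicted class matches its label."""
    hits = sum(classify(weights, p, **kwargs) == y for p, y in zip(patterns, labels))
    return hits / len(labels)


if __name__ == "__main__":
    # Toy 2x2 "images", flattened row by row: left column lit vs right column lit.
    left, right = [1, 0, 1, 0], [0, 1, 0, 1]
    weights = [[0.3, 0, 0.3, 0],   # output neuron 0 responds to the left column
               [0, 0.3, 0, 0.3]]   # output neuron 1 responds to the right column
    print(spike_counts(weights, left), accuracy(weights, [left, right], [0, 1]))
```

Reading out the most active neuron is one common choice for SNN classifiers; in a real system the weights would be learned over many training cycles rather than set by hand.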

In practical tests, the device used edge detection to pick out hand movements without capturing the scene frame by frame. Because only the changes are registered, this approach greatly reduces data and power requirements, and the device stores those changes as memories, much as the brain does. This work in the visible spectrum builds on previous research in the ultraviolet range. The technology could make autonomous vehicles and robots more responsive, helping them navigate complex, rapidly changing environments.
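The change-driven idea behind skipping frame-by-frame capture can be illustrated with the pixel model used by event (dynamic vision) cameras: a pixel reports an event only when its log intensity changes by more than a threshold. This is a swapped-in, generic technique, not the RMIT device's circuitry. The `update_memory` helper, which lets old changes fade while new ones are written in, is a hypothetical stand-in for the device's memory behavior, and the threshold and decay values are assumptions.

```python
import math


def detect_events(prev_frame, curr_frame, threshold=0.2, eps=1e-6):
    """Emit events only for pixels whose log intensity changed enough.

    Frames are lists of rows of non-negative intensities. Returns a list of
    (row, col, polarity) tuples: +1 for brighter, -1 for darker. Unchanged
    pixels produce no output at all.
    """
    events = []
    for r, (prev_row, curr_row) in enumerate(zip(prev_frame, curr_frame)):
        for c, (before, after) in enumerate(zip(prev_row, curr_row)):
            delta = math.log(after + eps) - math.log(before + eps)
            if abs(delta) >= threshold:
                events.append((r, c, 1 if delta > 0 else -1))
    return events


def update_memory(memory, events, decay=0.9):
    """Hypothetical change memory: old traces fade, new events are written in at 1.0."""
    new_memory = [[decay * value for value in row] for row in memory]
    for r, c, _polarity in events:
        new_memory[r][c] = 1.0
    return new_memory


if __name__ == "__main__":
    prev = [[0.5, 0.5], [0.5, 0.5]]
    curr = [[0.5, 1.0], [0.5, 0.1]]  # one pixel brightens, one darkens
    events = detect_events(prev, curr)
    print(events)
    print(update_memory([[0.0, 0.0], [0.0, 0.0]], events, decay=0.5))
```

For a static scene the detector returns no events, so only moving regions such as a hand generate data; that sparsity is what cuts bandwidth and power compared with sending every full frame.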

Applications and Future Directions

The MoS₂-based device could change how machines process visual input, particularly in high-risk or dynamic settings. By detecting environmental changes almost instantly with minimal data processing, it could enable faster, more efficient reactions. Potential uses extend to manufacturing and personal assistance, where effective human-robot interaction is vital.

The research team is currently working on scaling the single-pixel prototype into a larger pixel array, with new funding supporting these efforts. Future plans involve optimizing the device for more complex vision tasks, enhancing energy efficiency, and integrating it with existing digital systems. Additionally, the exploration of other materials could expand the device’s capabilities into the infrared spectrum, opening up possibilities for emission tracking and smart environmental sensing.

Implications for Autonomous Technology

This work is a notable step for autonomous technology. By mimicking the brain's ability to process and store visual information, the MoS₂ device offers a more efficient route to real-time vision processing in autonomous vehicles and robotics. Beyond potential gains in safety and performance in these fields, it could also inform future developments in artificial intelligence and machine learning.

The findings of this research, published in Advanced Materials Technologies, underscore the potential of brain-like devices to transform how machines interact with their surroundings. As this technology continues to evolve, it raises intriguing questions about the future of autonomous systems and their role in society.

As researchers refine and extend the technology, its range of applications is likely to grow, from more capable autonomous vehicles to better human-robot interaction. How will brain-like hardware reshape automation and artificial intelligence in the coming years?
